Executive Summary

Meet Our Panelists

Rupal Patel

Founder & CEO @ VocaliD

Rupal Patel is the founder and CEO of VocaliD, a voice AI company that creates custom voices. VocaliD’s award-winning technology empowers individuals living with speechlessness to be heard as themselves, and brings things-that-talk to life through uniquely crafted vocal personas. Rupal is currently on leave from Northeastern University, where she is a tenured professor in the Khoury College of Computer Sciences and the Department of Communication Sciences and Disorders. A native of Canada, she earned her bachelor’s degree from the University of Calgary and her master’s and Ph.D. from the University of Toronto, and she completed post-doctoral training at the Massachusetts Institute of Technology. Named one of Fast Company’s 100 Most Creative People in Business, Rupal has been featured on TED, NPR, and in major international news and technology publications.

Doug Young

Principal Software Architect @ Bose

Doug Young is a Principal Software Architect for Bose's consumer wearables products. He has been leading software development of Bose's Bluetooth headphone and wearable products for 8 years and led the teams that integrated Google Assistant and Alexa voice assistant interfaces into Bose products.

Lisa Falkson

Senior VUI Designer @ Amazon

Lisa Falkson is currently Senior VUI Designer at Amazon. She is the editor and author of “Ubiquitous Voice: Essays from the Field” and has over 15 years of industry and research experience, specializing in the design, development and deployment of natural speech and multimodal interfaces. Her most recent work includes next-generation voice user interfaces at NIO and CloudCar, as well as Amazon’s first speech-enabled products: Fire TV, Fire Phone and Echo/Alexa. Prior to that, she focused on early iPad/iPhone interfaces at Volio, and speech-enabled IVR applications at Nuance. Lisa holds a BSEE from Stanford, and an MSEE from UCLA.

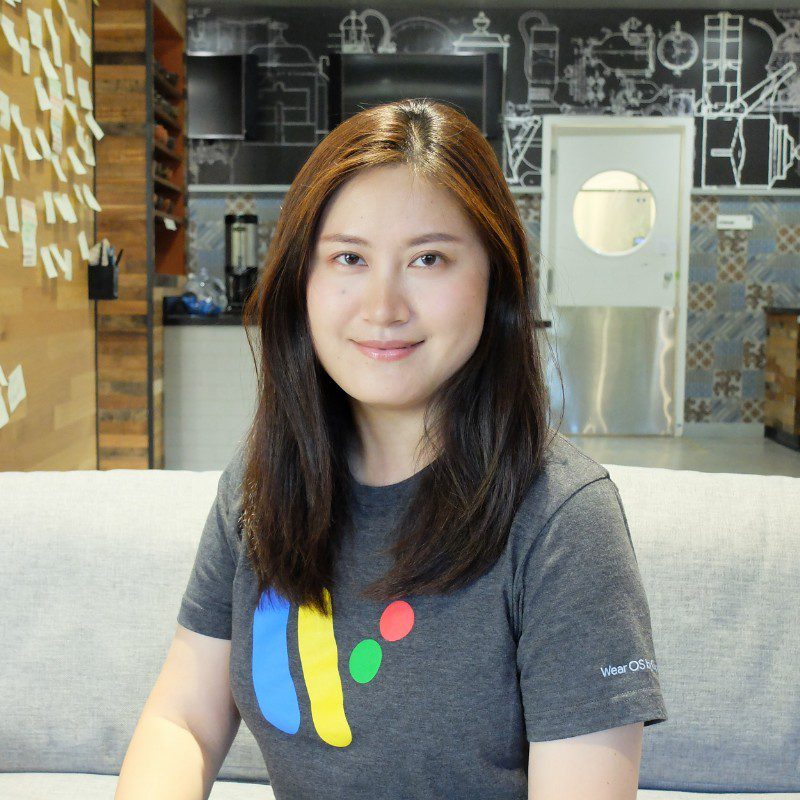

Jessy Xia

Senior Interaction Designer @ Google

Jessy Xia is a passionate designer with extensive experience in all aspects of wearable tech design. In her role as Senior Interaction Designer at Google, she helps shape the user experience and design of Google’s wearable product system. Previously, she led design efforts at Fitbit and SoFi. Jessy graduated with an MFA in Computer Arts and New Media from the Academy of Art University.

Meet Our Moderator

Rebecca Evanhoe

Visiting Assistant Professor @ Pratt Institute

Rebecca Evanhoe, author and conversation designer, has been developing technology that you talk to since 2011. Her book about conversation design, co-authored with Diana Deibel, will be published in 2021 by Rosenfeld Media. She teaches conversation design as a visiting assistant professor at Pratt Institute.


Event Q&A

What are some of the top use cases for conversational AI on the go, and in the wearable space today?

Jessy: When I think about the top use cases for on the go, I'm thinking less about physical locations, for example whether you're at home or not at home. I tend to think about it in the following three buckets. The first is when the user is on the move. That's one of the obvious buckets: the user is moving from point A to point B, either in the comfort of a vehicle, using in-car voice experiences, or walking down the street or biking, using voice interactions with the wearable and hearable devices on their body. The second bucket is when the user is on their feet. They might not actually be leaving anywhere; they could still be at home or at work. Especially in the current situation, we're maybe spending 95% of our time at home, but there are still a lot of opportunities for on-the-go use cases there. When we're at home, we put our phones on a desk or on the charger; at work, our phones are on a desk or in a pocket. But we're constantly moving, even within our private spaces. So a use case could be walking around my own house, doing laundry, getting things done, cooking, and entertaining my family, while still using the voice assistant on the wearables I always have on my body to get things done and get information: pulling up recipes, starting a timer when my hands are dirty, or putting on music through voice interactions from my watch to different IoT devices. So there are many opportunities even when we're at home: we're not stationary, we're still on our feet and on the go. And the third bucket is very specific to wearable devices: fitness and wellness.
That's because I think fitness and wellness are among the biggest value adds of wearable devices. I'd really like to zoom in on the one use case where wearable and hearable devices can function as standalone devices: a user can feel comfortable leaving their phone at home when they go for a run and use voice interactions with their hearable or wearable device. It could also be when you're at the gym, put your phone in the corner, and attend a group HIIT class using your wearable and hearable devices. If I take these three buckets and boil them down a little, I think the common thread is a state of multitasking. In all of these on-the-go use cases, users are trying to get things done or get more information while they're doing other things. That's the common thread that ties the on-the-go use cases together: we don't have the user's full attention. So I like to take that into consideration when designing voice interactions for these use cases.

Lisa: I'd like to build on what Jessy said about distraction and multitasking. I think that's one of the most challenging things about designing for voice, and it's not just on the go, it's true in general: the folks using a voice user interface are usually distracted. One of the top use cases is in the kitchen, right? Originally, about 50% of Echoes were in the kitchen; I'm not sure if that stat has changed. People use them for things they could do with their hands if they had washed them, but they're active: their hands are dirty, or they're busy touching or chopping something, so they use their voice. So both on the go and in voice generally, we always have to be really sensitive to multitasking: you should expect that the user is slightly distracted while they're using your voice interface. That's very different from screen-based interactions, and it's why things get interesting when you go multimodal with a screen, because you have a different level of attention and you're using touch. I like to put it this way: phone UX and screens are designed to be immersive, whereas voice systems are not. You can be at a concert or a bar listening to the music, enjoying it, and still be doing ten other things: drinking, playing a game, talking to your friend. There's a lot more multitasking with audio than there is when you're immersed in your phone.
Some other use cases haven't come up yet. Obviously, fitness is a big deal. I mentor the woman who designed the Echo Buds. She's a terrific designer, and she had some really interesting challenges, because you have a tap interaction on top of voice, for example to answer a call, but you're very limited in what you can do with taps. There are only so many taps and two sides of your head, and that's pretty much it. Fitness was a big consideration there: how do you have a personal trainer in your ear that encourages you on your run or tells you your time, and all that? I also like to think of on-the-go devices this way: sometimes they're standalone devices, like Jessy was saying, so you can step away from your phone, but sometimes they're really connected to your phone and are basically accessories. That always raises interesting questions, which I think we'll get to later with the technical challenges, around connectivity, form factor, and the processing power of the device. Sometimes you're limited in what you can do by all those technical limitations. The other thing we talked about in our breakout group, which I thought was interesting, was translation, because there are not just wearables like watches and buds, there are also frames. I'm really interested in how we could integrate translation into frames. For me, it would be a terrific use case. I spoke French as a teenager, and I guess it was pretty good French, but now I can't understand anything. If I went to Paris tomorrow, I'd be in a real pickle. I could probably read a couple of things, but understanding people, no. So if we're ever able to travel again, I think translation would be a great use case for me.

Rupal: I was actually going to add one more point to Jessy's framework, which I think is a really great one. I'd add another dimension: whether you're analyzing the voice and how a person is interacting with the device in real time, or longitudinally. That's one beauty of these interfaces: because they're on you as wearables, you can track things over time, not just cross-sectionally, which I think is a really exciting new dimension. I think we'll get to accessibility use cases a little later. In our breakout, one of the things we talked about was language, but also lifelogging. That goes along the lines of the longitudinal, specifically at both ends of the spectrum. When kids are young, you're looking at language development and using that as a way to memorialize that part of development; at the later end, you're talking about keeping the memories of your loved ones for longer. I'd put voice banking in there as well, given that you can save that voice and build an artificial voice from it.

What are some of the well-known devices and products for hearables and wearables?

Doug: I could start by talking about some of the different form factors of hearables and wearables, because I think the most popular use cases might be slightly different for each. Thinking through the list of on-the-go form factors, the car use case was mentioned earlier. Now that we've got CarPlay and Android Auto, a lot of rich functionality from your phone is more accessible in the car, so navigation is certainly a very popular use case for that form factor. There are, of course, smartwatches. Hearables are really a sub-segment of wearables and refer to headphone and earbud form factors. For those who aren't familiar with it, the term "hearables" popped up a couple of years ago in some media, suggesting that as headphones push into Bluetooth form factors with smarts in them, they can do more for users than they used to. For hearable form factors, there are lots of use cases, especially for people in cities who are on the go, walking, and maybe want to call up the music they're listening to or call a friend while their hands are full. It's a really great form factor that I think lends itself well to a voice UI.

One of the most exciting new form factors to me: a couple of years ago, Bose came out with Bose Frames, glasses with audio built in that are also Bluetooth based. The neat thing about those compared to earbuds or headphones is that earbuds and headphones are more isolating. You give a social cue to other people that you're not listening to them, and it's harder to interact with people. Bose has received a lot of great customer feedback, and there's a lot of excitement among consumers about a form factor where you can still be fully aware of your surroundings and talk to people, but also listen to audio, take a phone call, or interact with a voice assistant. It's still a small part of the market, but I think it has a lot of potential to grow in the future.

For conversational AI on the go, some devices use third-party assistants we're already familiar with; for example, you might have Alexa on your smartwatch. But other companies are making their own assistants. What is the trend right now?

Doug: It's an interesting question. Bose has gone down the road of adopting third-party assistants. Even before we did Google Assistant and Alexa integration, our Bluetooth headphones offered some rudimentary voice recognition that would call up Siri on your phone. But what we did with the Google Assistant and Alexa interfaces took it up a notch and brought a more sophisticated interface to users. It is an interesting question to ponder, though: for device makers like Bose, how do we differentiate? Of course, Google and Amazon win when they're on every single device. So if we put in all this development effort to get them on our headphones, and then everyone else has them too, how do we make our device special? I think finding a niche where we can improve the utility of our devices is really interesting. One way to do that would be to create our own Bose voice assistant. But while Bose is not a small company, we pale in comparison to the size of Google and Amazon, so it doesn't necessarily make sense for companies like Bose or others to try to duplicate everything they can do. They could go after a niche play. Or the question is whether, with big players like Google and Amazon, we can extend what's been done there so that we can customize it, like with the skills framework. I would say the skills framework has been built out in the home space, but on the go, we're still catching up there. That's maybe an opportunity.

Jessy: I think it'll be fun in the future if assistants can talk to each other. For example, I could say, "Hey Siri, can you take care of X with Alexa?" That would be really interesting. On the other hand, I think it is difficult for a smaller company to try to duplicate the whole capability of Google Assistant, Siri, or Alexa, partially because the increasing value, and maybe the future trend, isn't so much the assistant as a feature but the assistant as a tool. I like to think that the assistant is not the destination, right? Maybe in the beginning an assistant is somewhat of a novelty: people like to chat with Siri or ask Google Assistant to tell them a joke. But for the assistant to be more useful, it needs to be more of a tool. It's not about the assistant, it's about the timer, for example: how does the assistant let me set my timers hands-free, how does it get me somewhere, or how does it get things done faster for me? To do that, it has to leverage the power of the ecosystem: how does the assistant extend its reach into all the surfaces and services the platform has, and how integrated is it? The more integrated it is, the more powerful the assistant can be as a tool. If an assistant becomes too siloed, it's just a feature; it can't add much value in helping users get things done, or get things done with the favorite third-party apps they're already using. I'm not sure if that completely answers your question.

Rupal: Yeah, even if you take the assistant, think about the parts of the system: the language understanding or the speech synthesis. We're a third-party speech synthesis company that provides custom voices, so consider being in a skill: all of the skills you have sound like Alexa. Or if you're competing skills, let's say pizza joints, and you're ordering food, you don't know which one you're talking to today because the voice is all the same. Initially we had just one-offs, right? Now we have multiple versions that need to start differentiating. Today you can have another voice, but it's often pre-recorded; you can't use a text-to-speech service inside these standalone assistants to be the voice that comes back. Obviously, you can change the voice to one native to Amazon or native to Google, but you can't use a third-party text-to-speech service, which I think would open up a lot, especially for domain-specific tasks. No matter how big the teams are at these bigger companies, there are going to be specific niches where there might be an advantage in letting these companies keep what makes them unique, so they can have their own brand identity.

What are the challenging aspects of designing for conversational AI for on the go use cases?

Jessy: First of all, I think the challenges depend on the product we're talking about. We've mentioned Bose wearables and in-car experiences, and those are very different types of products. Some are voice-only, some are voice-forward, some are visual-forward, so the challenges could be very different. Maybe I can take a stab at it from the wearable, specifically smartwatch, perspective. The challenges really come from the unique aspects of the smartwatch interaction model. From our experience designing for the smartwatch, we realized that its interaction model is very different from other device types, especially mobile. Users interact with wearable devices much more frequently during the day, in much shorter sessions. For example, a user might check their phone maybe 20 to 30 times a day, for anywhere from five minutes to hours at a time, because the phone is really good at sucking users in: you could check a notification and end up watching YouTube videos five hours later. With watches, some research found that a user who wears a watch all day could check it as many as 150 times a day on average, but each session is very short, maybe five to 10 seconds. So those are very short but frequent interactions. The first challenge, then, is how we design voice and conversational experiences for these frequent, short interactions, and how we can add value with voice in such short sessions. The second challenge is that not everyone is always comfortable speaking, or continuing to speak, depending on where they are. Obviously, some people feel more comfortable using voice interactions in private spaces than in public spaces.
So when we think about getting things done with voice interactions, if a user has to go through five steps of disambiguation to clarify their intent before they can actually finish a task, they're not going to do it with the voice assistant on their wearable. If you think back to all the use cases we talked about, this is unlike a chatbot on a screen, where users give their full attention: users are not going to talk to an assistant for five minutes in an on-the-go use case. So those are most of the challenges I encounter designing for voice interactions.

Doug: I think Jessy hit on a good one. Privacy concerns on the go are very different from in-home interactions. As we introduced voice UI into Bose products, in particular the Noise Cancelling Headphones 700, which I'm wearing right now and which were our first headphone product to introduce wake word support, there was a lot of debate: is someone out in public actually going to be comfortable using the wake word? Are they going to have concerns about someone else triggering it? If I'm in a cafe, am I going to have confidence using it, or will I be more apt to use a visual device or a button interaction? That's definitely a sensitivity you have to think about in the interaction design and use cases. Another aspect you have to be cognizant of: I encountered this before we even did voice assistant integration, when we were just introducing voice prompts into some of our interactions on Bose headphones five or six years back. It was a delightful experience to have that richer feedback, someone telling you, "Hey, now you're connected to your device." But you have to be really careful not to be too pushy with those voice notifications, or they get annoying really quickly. Some of the more interesting voice assistant use cases I've seen on the go involve calendar notifications. A lot of times you may be immersed in music and miss that it's time to jump on a call, so the utility of being interrupted by a voice saying, "Hey, your meeting is coming up," can be really high. But if those notifications come in too frequently, the user is going to get annoyed very quickly; they might disable the assistant, and now you've lost the user. So you've got to strike the right balance to keep the utility without annoying the user.

Lisa: So I think proactivity, and being unobtrusive, in a passive device that you walk up to versus one you're wearing or carrying around with you, is a very interesting point and something we should think about as designers. When is it appropriate to be proactive? I think the answer is a little bit different on the go.

Can you give us a baseline definition of accessibility and tell us how accessibility and design interact in these wearables and on the go spaces?

Rupal: The way I think about it, there are two ways to think about what an accessible piece of technology is. One is that it augments someone's abilities, and the other is at the other end, where you can supercharge abilities into superpowers that humans don't normally have. On the accessibility side, we think about large populations: the visually impaired, the hearing impaired, and so on. Those areas have largely been studied as smaller groups of people, with the visually impaired probably being the largest disability group and also usually the most able to advocate for their needs. With hearing impairment, I feel there's always been a tension: is it a cultural thing? Some people who are deaf don't want that additional accessibility feature because they're proud of their culture. The one group I think is often missed is the motor impaired. That's because there are so many reasons why people could be motor impaired, from childhood disabilities like cerebral palsy to later-in-life conditions like ALS. Because these populations are not cohesive and acquire their disabilities at different times in their lives, they often lack the power to ask for certain features, and the technology often isn't there. In many of these cases, they literally don't have a voice. So on that end, we really need to be thinking about how to augment the ways in which they can access this technology. Often it's not just one methodology, because the heterogeneity in these populations is huge. Among people with visual impairments, you have people who can't see small print but can see big print, people who can't see at all, and some who can't see color, right?
So there's a huge range of disabilities, or abilities, let's call it, and I think we need to be thinking about how to give as much flexibility and multimodality as possible, so that if you don't have access to one modality, there are other ways to get to the device. On the superpower part, I think this is really exciting, because sometimes if people have an inability or a poor ability in one area, you can supercharge them in another and almost let them compensate for it, which I think is fascinating. I just read about a study done recently in Pittsburgh with a sensor that's not a hearable, but is placed in the ear because it has access to the vagus nerve, the 10th cranial nerve, which you can stimulate transcutaneously. Essentially, it's not a shock but a tiny little nerve activation. They're using it to trigger or improve learning, and what they found is that when they were trying to teach English speakers Mandarin, they could pair learning a word with this signal, and people learned much quicker. It sounds like a completely different area, right? But you start to think about ways in which sensors are becoming tinier and tinier and able to be part of the wearable fabric. I've worked for a long time with a group that has been doing biosensors called surface EMG; EMG stands for electromyography. It's surface-based sensing of the muscles of the face and neck to do silent speech recognition. And of course, you can go from silent speech recognition to augmenting one's voice as well. So there are a lot of possibilities when we don't just think about individuals who have specific disabilities and start thinking more broadly.
I mean, almost every new technology we use today came about because there were groups of people who needed it. Hearables, a huge market, came from individuals who didn't want to wear their hearing aids because they really hurt; they were so hard. Now there are Apple's AirPods: you can put them in, and they won't fall out even when you turn your head. That's fantastic, because you get these amazing technologies. But so many people just put their hearing aids away in their bedside drawers, and I think that's a real missed opportunity, because we thought about the technology but we didn't think about the interface. I think the same is true for voice. Stephen Hawking and all sorts of people with speech disabilities have used assistive devices to talk. But oftentimes, even when it's such a critical thing, they don't use those devices because they don't sound like them, right? So there are a lot of opportunities here if we open up our aperture to what is possible, not just for those populations but universally.

Is voice the best input or output for all interactions on the go, and what are other inputs, outputs that can be used in conjunction?

Doug: I think the short answer is no, voice is not always the best input. We debated this a lot as we were working on wake-word-enabled headphones: should we still support a button to talk? After a lot of debate, we decided, yes, it's important. We were excited about enabling the wake word for voice assistants on our headphones, but even for the same person who naturally likes that, there may be situations where you want to be a little more discreet, or you're in a noisy area where it doesn't work very well. So having some sort of button is a really good thing. And for really complex interactions, there's still no replacement for a screen. The button user interface on headphones is certainly very limited, and that has pushed a lot of hearable and wearable device makers to create mobile apps for when you want to go in and manipulate settings or do more sophisticated things that augment your device.

What are different considerations for different inputs and outputs, to supplement voice or replace it?

Lisa: I think one thing we don't talk about a ton is gesture and haptics. One year at South by Southwest, someone was talking about a wearable, an outfit integrated with directions, where you would actually get a tap on your right shoulder when it was time to turn right. That seems kind of far out, maybe a little too much for me, like it's telling you what to do. But I do think about haptics, and gaming often leads technology breakthroughs, right? Think of Fortnite and the games that push the envelope of what our technology can do. Some of the greatest voice, sound effects, and visual effects are in games.

Jessy: I just want to add on to what Lisa said. Think about the tools in the toolbox of responses that a voice or conversational experience has: there's the visual response, there's the speech response through text-to-speech, then there are sound effects, and then there's haptics. One thing that could be explored more is how all these response tools work together to give the user the perfect response during voice or conversational interactions. On top of that, we could think about the combination of devices a person has on them. Someone could be wearing just a smartwatch, or a smartwatch paired with headphones. Should the response be handled differently? Probably, and I think that hasn't been worked out really well, partially because of additional technical difficulties. For example, people generally pair their headphones with their phone, and the headphones can't seamlessly transition from your phone to your watch. That makes it hard, but I think it's an area we could explore more: how those response mechanisms can all work together to inform the user.

How do you deal with low connectivity and processing power on devices?

Doug: I would say that's one of the major constraints. I'll start with processing power. These are small devices, especially as you get into earbud-form-factor hearables, or even frames, and users increasingly demand all-day use. That drives the processing power to be small so it doesn't eat up a lot of battery life, but it creates difficulties for enabling these rich interactions. As for connectivity, some devices have direct Wi-Fi connectivity, but those are the exception rather than the rule. A lot of them, at least in the wearable space, go over Bluetooth to the phone, and often we're relying on, say, the Google Assistant app or the Alexa app running on the phone; if that's not running, the experience falls apart. At least we're able to leverage the phone's communication link, which can be spotty but is generally reliable. But it's definitely something you have to think about in the interaction design: when this fails, what is the experience? If I'm not able to get connectivity to the phone or the cloud, I need some local feedback to the user so they can understand what happened and have some way to correct it. So it definitely needs to be top of mind. You can't design things for the on-the-go space, at least in the smaller form factors, in exactly the same way as you can for the home, where you don't have to worry about those constraints.

How do you gather information to identify use cases for conversation design if this is the first time a product is being launched?

Rupal: I think there are a lot of general design principles here: building low-fidelity prototypes and mocking things up a little more with each pass of the iterative cycle. You're asking: is it really an itch? Is it a big, huge need, or is it just a nice-to-have? And how ready is the technology for it?

Then there's all the customer discovery that happens in general usability and user-centered design processes. Recently, we've been looking at ways to use the custom voices we're building for a broader market audience. We've been testing that in the same way, with small, really homogeneous groups of users rather than super heterogeneous groups, because otherwise you only get a little sprinkling of data. When you're doing this type of data analysis, especially if it's pretty exploratory, you have to make sure you can get some information out of it that's going to stick. The worst thing you can do is run a study with 5, 10, or whatever number of people and find out that two people like it and five people don't; it gives you nothing. With homogeneous groups, at least you can start to segment the market audience. So: make your best guess, then test, then iterate.

How do you get information about use cases before you even have a product that can be tested?

Jessy: In a lot of ways, it's very similar to early concept validation testing, which tends to be lower fidelity and rough. Sometimes it's lab studies with a smaller sample size; sometimes it's research with a broader audience, like a more longitudinal study using surveys, a sort of journey approach, to discover how a feature works over a longer timeline; and sometimes it's getting into people's homes to see how they use certain products in their physical space. In general, those are the common tools we have on the UX research side for concept validation. Then we design and iterate, and once we have a more concrete product, we do more research on execution and usability.

Curated Resources By Voice Tech Global

In this section, we have highlighted all the articles that have helped us put together the initial set of questions for the panel.

Readings

Hearables and the real on-the-go Assistant we’ve been waiting for: An outline of the on-the-go assistant by Voice Tech Global co-founder Tim

Vinci: A Kickstarter campaign for a standalone smart headphone with a built-in smart assistant and touch controls. (The product was discontinued.)

Hearables: An outline of hearables, their challenges, and their opportunities.

Assistant Interoperability: A follow-up on the conversations around how assistants can work together.

Earbuds to learn new languages: An article and research paper on an experiment in learning a new language with the help of earbuds.
