Executive Summary

Meet Our Panelists

Saadia Gabriel

Ph.D. Student @ University of Washington

Saadia Gabriel is a PhD student in the Paul G. Allen School of Computer Science & Engineering at the University of Washington, where she is advised by Prof. Yejin Choi. Her research revolves around natural language understanding and generation, with a particular focus on machine learning techniques and deep-learning models for understanding social commonsense and logical reasoning in text. She has worked on coherent text generation and studying how implicit biases manifest or can be captured in toxic language detection.

Louis Byrd

Chief Visionary Officer @ Goodwim Design

Louis Byrd is the Founder and Chief Visionary Officer of Goodwim Design, a hybrid-design studio dedicated to licensing original ideas and socially responsible technology to companies in need of novel breakthroughs. Louis has set out to build a world where equity is the norm so that people everywhere will have an opportunity for success. He believes that technology is only limited by the imagination and life experiences of the creator.

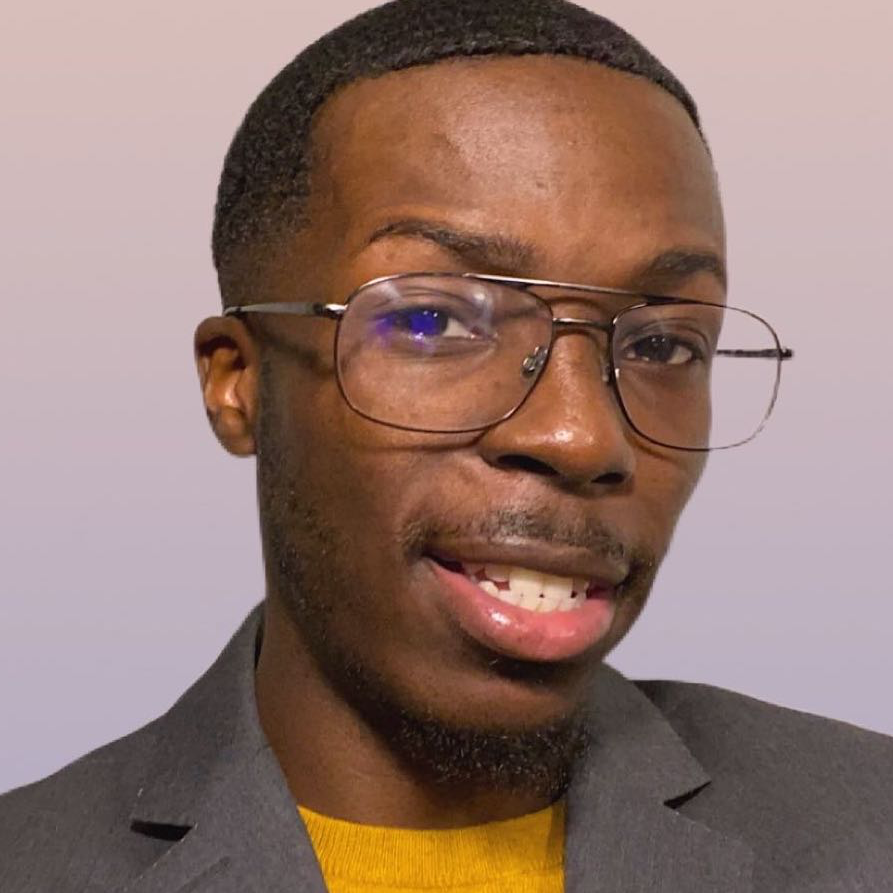

Jamell Dacon

Ph.D. Student @ Michigan State University

Jamell Dacon is a second-year graduate student in the Department of Computer Science and Engineering at Michigan State University (MSU).

Jamell completed his M.S. degree in Computer Science in Spring 2020 at Michigan State University, and his B.S. degree in Mathematical Sciences in Spring 2018 and A.S. degree in Computer Science in Fall 2017, both at The City of New York - Medgar Evers College (MEC). Jamell was awarded a University Enrichment Fellowship (UEF) by the Graduate School at MSU (2018-2023). He joined the Data Science and Engineering (DSE) lab in Fall 2018. His research interests include machine learning, primarily developing efficient and effective algorithms for data and sequence modeling and applying them in fields such as bioinformatics, recommendation systems, and natural language processing. Beyond his academic work, Jamell is very passionate about community outreach, diversity, and inclusion, and he was awarded a Graduate Leadership Fellow position by the College of Engineering.

Charles Earl

Data Scientist @ Automattic

Charles Earl works at Automattic.com as a data scientist, where he's involved in integrating natural language processing and machine learning into Automattic's product offerings (among them WordPress.com and Tumblr). In that capacity, he is passionate about building technology that is transparent, equitable, and just. He has over twenty years of experience in applying artificial inte

Event Q&A

I'd like each of you to share an anecdote, or the moment when you either realized or experienced bias in natural language processing or any conversational AI product.

Saadia: Yeah, so the experience I have to share of implicit bias is a project in NLP that I started working on pretty early on during my time in grad school, where I was looking into toxic language detection in social media posts, specifically Reddit posts. For that project, one thing I was exploring was how to fool deep learning classifiers into thinking that a benign post, a post that doesn't contain any toxic content, was toxic, and vice versa. Initially, I thought this would be a really challenging task: these models seem to perform fairly well on toxic language detection datasets, so it should be fairly hard to trick them. But it turned out that a lot of these toxic language classifiers are easily swayed by keywords. For example, if there's a phrase like "I'm a black woman" or "new family on the block is Muslim," the classifier thinks it has a higher likelihood of being toxic just because it contains a keyword with information about a demographic that's likely to be targeted by toxic posts or by somebody with malicious intent, rather than because the post itself is toxic.

Charles: There are a couple of systems I've worked with where it's been apparent, but the most blatant example of something that could really harm or offend people was a domain name recommendation system that we had fielded. Lots of people, when they get WordPress accounts, want to set up their website and want a custom domain. At some point we ran a service where you could type in, you know, "all about Bs," something like that, and get a domain name that matched it or at least was semantically close. As part of monitoring the system, we would occasionally do checks where we would just type in arbitrary words and see what happened. And we noticed, pretty much every couple of weeks when we ran these samples, that it would invariably come back with a word that was quite offensive. One example that stuck out for me is that one person was trying to get an LGBTQ advocacy site, and it came back with an offensive term that was, without a doubt, very transphobic. This kind of thing sticks out because when you're actually trying to build a product that's based on natural language processing, and real users are trying to do something with it, you get a sense of how this kind of harm can impact people and, basically, impact your bottom line.

Jamell: So, one example that I had: I was doing a project a while ago that did parallel context data construction. This is where you have gender word pairs for males and females, like he and she, husband and wife, Mr. and Mrs., stuff like that. And then race word pairs from standard US English and AAVE. AAVE is African American Vernacular English, so it's the set of words and the way of speaking typical of African Americans, what's sometimes called Ebonics. We put these word pairs into regular sentences and ran some fairness tests on them. For example, with "He has a really nice smile," the sentiment of the response was positive. Then we changed the word "he" to "she," and the sentiment was negative, because the system responded with something like "Her smile is cute, but she's evil." And I was like, why would it say that? In terms of race word pairs, we changed the word "this," T-H-I-S, in a regular sentence to "dis," D-I-S, which is how it's shortened in AAVE. And it responded offensively, with things like drugs and other offensive stuff. I was really confused as to why a single word made the dialogue system respond in such a way.
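The word-pair swapping Jamell describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the project's actual code: the function names `build_swap_map` and `make_parallel` are hypothetical, the pair lists contain only the pairs quoted in the discussion, and ambiguous words such as "his" or "her" are deliberately left untouched.

```python
import re
from typing import Dict, Iterable, Tuple

# Only the pairs quoted in the discussion; a real study would use far longer lists.
GENDER_PAIRS = [("he", "she"), ("husband", "wife"), ("mr", "mrs")]
DIALECT_PAIRS = [("this", "dis")]  # Standard US English -> AAVE


def build_swap_map(pairs: Iterable[Tuple[str, str]], bidirectional: bool) -> Dict[str, str]:
    """Turn word pairs into a lookup table (hypothetical helper)."""
    swap: Dict[str, str] = {}
    for left, right in pairs:
        swap[left] = right
        if bidirectional:
            swap[right] = left
    return swap


def make_parallel(sentence: str, swap_map: Dict[str, str]) -> str:
    """Return a copy of the sentence with every mapped word swapped."""
    def replace(match: "re.Match[str]") -> str:
        word = match.group(0)
        swapped = swap_map.get(word.lower())
        if swapped is None:
            return word  # word is not part of any pair, keep it
        # Preserve a leading capital letter from the original word.
        return swapped.capitalize() if word[0].isupper() else swapped

    return re.sub(r"[A-Za-z]+", replace, sentence)


gender_map = build_swap_map(GENDER_PAIRS, bidirectional=True)
dialect_map = build_swap_map(DIALECT_PAIRS, bidirectional=False)

print(make_parallel("He has a really nice smile.", gender_map))
print(make_parallel("I really like this song.", dialect_map))
```

Each original sentence and its swapped copy would then be sent to the same dialogue system, and the two responses compared with whatever fairness measurement is being used.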

Louis: Yeah, definitely. So my story comes more from the actual user experience side, not so much under the hood. Recently, my wife and I purchased a Samsung smart fridge, whose AI is powered by Bixby. What I discovered, and part of this could be because I'm from the Midwest here in the United States, is that we have a certain sound in our tone and dialect that comes out, especially on the east side of Kansas City, Missouri, where I'm from. I noticed one day, when I was trying to interact with Bixby, that it never understood anything I would say. Something as simple as "Can you tell me what's on my calendar today?" and it would tell me the weather of some country across the seas. So that was confusing. Then one day, I was just joking around with my wife and I put on my white man voice. For those of you in the US, you know exactly what I'm talking about. I changed my tone, really turned it up a little bit, and did one of those numbers. Then all of a sudden, Bixby knew exactly what I was saying. So I started talking to the device, and whenever I put on my white man voice, it answered the questions with pure accuracy. But whenever I talked in my natural tone, it barely understood me. The scientist in me wanted to experiment more, so I had my wife try it, and the same thing happened with her. So that's kind of where my interest in conversational AI came from.

What do you think is the main reason behind those issues of bias?

Louis: I believe the main reason behind many of these issues goes back to the source. What I mean by that is, where is the information coming from? I think it's easy to say that it's the developers or the designers who are exhibiting certain biases, and maybe that's true. But you also have to ask: when they're training these machines, where does that data come from? Is that data skewed? Does it have a very limited range of who they're basing their data inputs on? So for me, the issue comes back to the inherent biases that exist from the onset: where is the data coming from, and who is that data tailored around, from a cultural perspective, an ethnic perspective, and all those things.

In the case of toxic language detection, where and how have the models you use been trained? What language do they use? Is the point Louis is making about the cultural impact on the dataset true, going back to your research on that specific topic?

Saadia: Yeah, so I believe that, to some extent, you can boil it down to the data. One thing we did find is that there are dialect biases as well in toxic language detection. We had a paper where we were looking at African American Vernacular English in particular, and found that toxic language models are biased against that dialect. That is likely partly because there's not as much of that data in current training sets, because a lot of the researchers who collected those datasets or did this work previously may not have been thinking outside of a particular cultural perspective. And the lack of diversity in computer science leads to this kind of disparity. But it's not just data, because there's also been work showing that the machine learning models themselves can amplify biases that are already in the datasets. So if you have some slight bias, the machine learning model is going to learn to amplify it and reproduce it on a larger scale. So it's not just a dataset problem, unfortunately.

So we say there are not enough datasets. You mentioned that we do have AAVE datasets. So how come we still have these issues?

Jamell: So, one thing I'd like to mention is that it's not only AAVE. There's also a word embedding problem, where most of the popular state-of-the-art models map men to working roles and women to traditional gender roles. If you're doing language modeling and translating with Spanish, and you start from the word "doctor," it's immediately mapped to men. And you have feminine words that end in "a," so they don't translate the same way if you're going from English to another language that has masculine and feminine words like that. In terms of AAVE, well, I haven't come across datasets that are fully AAVE. They're sentences scraped from Twitter and Reddit, there's a bunch of slang, and there's a lot of pre-processing needed before you can put them into the model. So it's a lot of work for developers and people who create these models to try to avoid these gender or racial biases, and it's seen as too much work. So they normally just take text from newspaper articles or, say, from the government when it puts out mass messages. So it really affects the African American community.

What do you think would be a good way to communicate and make people aware of these challenges?

Charles: I'm glad you asked. The key is that I'm trying to use this whole moment as a way to get a lot of those discussions going, with, you know, the Movement for Black Lives protests that have exploded not only in the US but in places around the world. A lot of technology companies in particular, even if you look at the audit that was done on Facebook and has just been posted in a lot of places, are saying, "We really have a problem." So one thing that I've done is try to say that we all, collectively as a company, have an interest in making the models correct and looking at what harm they do. We should have a discussion that involves the legal team, the developers, the data scientists, everyone, around what our company values are. From that point, we can make decisions: for this vocabulary system or this conversational system, what data should we really collect? How should we evaluate it? What error functions or cost functions should we use to make sure that the impacted communities are not suffering? So I think it begins with a company-wide discussion: what are the values, and how are those values manifest in what the conversational system, the AI, is doing?

What is your approach? How do you bring the motivation and willingness to go and make something that is much more inclusive and socially responsible?

Louis: That's a great question. I believe Charles pretty much hit the nail on the head right there. It starts outside of the products and outside of the technology. It starts back at the actual business: what your mission is, what your goals are, and whether you actually care about it. A lot of companies are doing a great job right now with the lip service around it. But the question is, do they really care and want to make the change? That comes down to them holding themselves accountable, but also taking the necessary steps to be self-reflective as an organization and ask these tough questions: where does bias exist within our organization? There are a lot of ways that can be looked at. A lot of times there's this concept of corporate social responsibility, and we've been seeing that a lot lately, where companies are donating crazy amounts of funds to social justice causes. But then the question becomes, what are you doing internally to actually try to solve some of these challenges? What are you doing to look at your technology and your output? For me, it comes back to them taking the time to self-reflect, to do the work themselves, and to try to understand. So when I approach a company, the first place I start is trying to understand: where are you today? What is your position on bias? What's your position on inclusivity and culture? Then I really try to analyze that and see if it makes sense for us to move forward together, and where the opportunity for change is in your company.

What is Natural Language Processing (NLP)? And what are some of the main issues there that bring bias into the larger products that embed these systems?

Saadia: Yeah, so natural language processing is mostly centered around understanding and potentially generating natural language. When you think of natural language, it could be a dialogue, a story, some part of a chat, and so on. Some of the tasks within that are classification, which toxic language detection falls under, and generation. If you think of a chatbot, having an open dialogue with that chatbot would be a generation task. The cases in which you could have some bias manifest include examples like we talked about before, where somebody has a particular dialect and the system discriminates against that person based on that dialect. So it might give them only certain answers, or answer their questions differently, or not understand them because of the dialect. There are also cases where a particular decision might be made based on some attribute of that person, whether that be race, gender, or ethnicity. For example, in natural language tasks like machine translation, you might have a bias in the embeddings where the system would predict that a nurse is female, or generate something about a nurse being female in Spanish or French, languages with gendered words, versus a doctor being male.

How do we detect that a dataset has bias? How do you decide which dataset to pick and which techniques to use?

Saadia: Yes, I think one concrete example to start with, in terms of mitigation, is one that doesn't require actually collecting data: looking at how the models behave in different cases. If you have a model trained on a particular dataset, say a generation model or a classification model, and you want to learn what biases it may have, you can provide different inputs and see whether it shows the same behavior on an input with a particular dialect or a particular lexical keyword versus an adversarial example. If it seems to be giving an equal distribution of outputs to people regardless of race, gender, ethnicity, or dialect, that indicates a more unbiased model, versus cases where you can clearly see that the distribution of the outputs it's giving is not equal.
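A minimal sketch of this kind of probing is shown below. Everything in it is an assumption made for illustration: `toy_toxicity_score` is a hypothetical stand-in for a real trained classifier (it deliberately over-weights identity keywords to mimic the failure mode discussed earlier), and `probe` and `max_gap` are hypothetical helper names. In practice you would pass your own model's scoring function to `probe`.

```python
from typing import Callable, Dict, Iterable


def toy_toxicity_score(text: str) -> float:
    """Hypothetical stand-in for a trained classifier.

    It returns a made-up 'toxicity' score driven purely by keywords,
    which is exactly the behavior the probe is meant to expose.
    """
    keyword_weights = {"muslim": 0.6, "black": 0.5}  # assumed, not learned
    words = text.lower().replace(".", "").split()
    return max((keyword_weights.get(w, 0.0) for w in words), default=0.0)


def probe(score_fn: Callable[[str], float], template: str,
          fillers: Iterable[str]) -> Dict[str, float]:
    """Score the same benign sentence with only one word changed."""
    return {filler: score_fn(template.format(group=filler)) for filler in fillers}


def max_gap(scores: Dict[str, float]) -> float:
    """Largest difference in score across the substituted words."""
    return max(scores.values()) - min(scores.values())


scores = probe(
    toy_toxicity_score,
    "The new family on the block is {group}.",
    ["Muslim", "Canadian", "tall"],
)
for filler, score in scores.items():
    print(f"{filler:10s} {score:.2f}")
print("max gap:", max_gap(scores))
```

A gap near zero across many templates and many substituted words is what an even-handed model would show; a large gap on a benign sentence is a signal worth investigating, not proof of a specific cause.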

Jamell: So one thing that I would like to mention for this type of question is that you would use fairness measurements. For fairness measurements, you have things like sentiment analysis or attribute words. For attribute words, I've seen categories like pleasant words, unpleasant words, career words, or maybe even timely words. Saadia brought up a great point about words with respect to careers, where you say "He is working in a hospital" to a dialogue model, and over 60% of the time the dialogue model would produce a response like "He is a doctor." If you said "She's working in the hospital," it's "She's a nurse" or "a secretary," when in fact they both can be doctors, surgeons, and so forth. You also have politeness and other types of fairness measurements that you can test to see what the dialogue model outputs.
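One way to turn the attribute-word idea into a number is to count how often responses to each version of a prompt mention words from a given category. The sketch below assumes a hypothetical helper, `attribute_rate`, and uses a handful of made-up placeholder responses purely to show the mechanics; they are not real model output and say nothing about any actual system.

```python
import re
from typing import Iterable


def attribute_rate(responses: Iterable[str], attribute_words: Iterable[str]) -> float:
    """Fraction of responses that mention at least one attribute word."""
    targets = {word.lower() for word in attribute_words}
    responses = list(responses)
    if not responses:
        return 0.0
    hits = 0
    for response in responses:
        tokens = set(re.findall(r"[a-z]+", response.lower()))
        if tokens & targets:
            hits += 1
    return hits / len(responses)


# Made-up placeholder responses, NOT real model output. In practice these
# would be sampled from the dialogue model for each version of the prompt.
responses_he = ["He is a doctor.", "He is a surgeon.", "He is visiting a friend."]
responses_she = ["She is a nurse.", "She is a secretary.", "She is a doctor."]

doctor_words = ["doctor", "surgeon"]  # a tiny, assumed "career word" list

rate_he = attribute_rate(responses_he, doctor_words)
rate_she = attribute_rate(responses_she, doctor_words)
print(f"he: {rate_he:.2f}  she: {rate_she:.2f}  gap: {rate_he - rate_she:.2f}")
```

The same function can be reused with pleasant, unpleasant, or politeness word lists; only the word list and the prompts change.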

Can't we just get machine learning to fix itself and resolve bias? Is there an avenue for that?

Jamell: It's a hard no, for the fact that people are free to speak however they want to speak. And with these datasets, like Charles was mentioning, it comes down to how users are using the models and the application. For example, there was a chatbot released a couple of years ago that became racist in a day. It was supposed to respond to greetings, so you say "Hi, how are you?" and it responds. But people were feeding it a bunch of racist messages, and it responded offensively and learned how to use profanity. It was really interesting to watch, but Twitter had it taken down. So I say you can't get machine learning to fix itself. However, you can have it do better by adjusting algorithms, for example by constraining predictions. You can learn gender-neutral embeddings, or use adversarial learning, adjusting discriminators to see how well the model performs when it's dealing with, as Charles mentioned, examples that were not accounted for.
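As a rough illustration of what adjusting embeddings can look like, the sketch below removes the component of a word vector that lies along a "gender direction." This is a simple projection-based post-processing step, a related but different technique from training gender-neutral embeddings from scratch, and it is not necessarily what Jamell has in mind. The three-dimensional vectors are toy values invented for the example, and `neutralize` is a hypothetical helper name.

```python
from typing import List


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def neutralize(vec: List[float], direction: List[float]) -> List[float]:
    """Remove the component of `vec` that lies along `direction`."""
    norm_sq = dot(direction, direction)
    if norm_sq == 0:
        raise ValueError("direction must be a non-zero vector")
    scale = dot(vec, direction) / norm_sq
    return [v - scale * d for v, d in zip(vec, direction)]


# Toy vectors invented for illustration; real embeddings have hundreds of
# dimensions and come from a trained model.
he = [1.0, 0.2, 0.0]
she = [-1.0, 0.2, 0.0]
doctor = [0.4, 0.5, 0.3]

# Assumption: the difference between a gendered word pair approximates
# the direction along which gender is encoded.
gender_direction = [h - s for h, s in zip(he, she)]

doctor_neutral = neutralize(doctor, gender_direction)
print("before:", dot(doctor, gender_direction))
print("after: ", dot(doctor_neutral, gender_direction))
```

After the projection, the toy "doctor" vector no longer leans toward either end of the assumed gender direction, while its other components are unchanged. Whether this kind of adjustment removes bias in downstream behavior is a separate question that still has to be measured.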

What work can I do to help mitigate the issue of bias when making a product?

Louis: Great question. Maybe this is a little New Age thinking of mine, but I believe wholeheartedly that designers have to take a very empathetic approach when it comes to design and development. What I mean by that gets back to what Charles was getting at, in a lot of ways. To me, technology is nothing more than a tool; at the end of the day, it is created by humans. And inherently we humans are flawed, but there's nothing wrong with that. That's humanity, right? So with that being said, I think that in order for us to build better products, to have better outcomes and deliverables, and to approach things with more inclusion in mind, it takes a lot of work. But when I say work, I'm going back to something I was mentioning earlier. Guy, I know you said that it sounds like we're coming from a top-down approach. My opinion is that it's not necessarily top-down. I believe it takes the organization looking at its entire business model from the top down, the bottom up, and everywhere in between. In order to build those more inclusive products, you have to really focus on asking the question, again: where do we exhibit bias? Not just from a leadership standpoint, but looking at the entire ecosystem of the business and the entire ecosystem of product development. So again, getting back to what Charles was talking about, the tool itself is one thing. But it's also asking these questions: if I have this dataset that's come to my table, or my terminal, whatever, am I going to pause for a moment and take the time to look at it and ask, "Hmm, this is coming from Oxford data that is predominantly from white males who are upper middle class"? I'm giving a shout-out to my man Charles; we talked about this in our breakout session.

So basically it's looking at that dataset and asking the question: is this dataset skewed? But to me, it's less about the data and more about the human being conscious enough to say, "Let me ask these questions." And to wrap that all up, my belief is that in order for a lot of this stuff to change, it's going to require more people to be aware, conscious, and willing to do the work. I know that you kept talking about work; it is going to be work. Just focusing specifically on the US, and on some of the racial things that are taking place, we've got 400-plus years of things that have built up against this. So with AI coming to the forefront only in the past decade or two, we've got a lot of catching up to do, and it's going to take some work. That's why I believe it's very important for people to actually want to change and to do the work themselves to ask these questions.

How do you think we could move forward, and what are some of the things you see coming that will help us take control and mitigate or get rid of bias?

Saadia: Yeah, so I think it's going to take time. Of course, I don't believe that we should sit on our hands and accept the way things are. But I definitely think it'll take time to enact various ways of mitigating biases. And there's always the case of new biases coming up, or the fact that we aren't necessarily able to account for every instance in which bias may manifest. But I think some really concrete next steps are coming up with universal evaluation metrics or frameworks for identifying biases in dialogue, chatbots, and other places where they may manifest, and pushing for more regulation of the systems that are released. We need to think about the ethics and the potential repercussions of deploying these kinds of products early on, even before it becomes an issue, and have more regulation around that deployment and more consideration of what kinds of tools are being put out on the market or what applications are being researched.

Have our panelists or attendees encountered a Black voice assistant or chatbot?

Louis: I have not in real life, but in the movie Little, they had one. I thought it was a good idea. The chatbot, or the AI, was called Sister.

Jamell: I've never seen one. I just think it's kind of funny, but I feel like in the next couple of years there should be an intention to create one that works on AAVE as well as US Standard English.

Curated Resources By Voice Tech Global

In this section, we have highlighted all the articles that have helped us put together the initial set of questions for the panel.

Review of the bias definition and the strategies of mitigation by IBM

The origins of bias and how AI may be the answer to ending its reign: Jennifer Sukis shares a timeline of bias and some of the solutions and strategies that have been implemented at IBM to mitigate it.

Case study of bias for NLP with consequences in the real world

Risk assessment for criminal sentencing bias: This ProPublica article relates the story of a tool used to evaluate the risk of recidivism and its bias against African Americans.

Stanford study on bias in speech to text

Visualization summary of the study

This study from Stanford outlines some deficiencies in speech-to-text engines. It's interesting to review, especially following a similar study that was done three years before.

A thorough review of the process of building an inclusive / empathy first conversational AI product

Year-long exploration of Conversational AI and human psychology: Evie shares a journey in understanding how conversational AI can connect with humans. This thesis uses many approaches and techniques for inclusive design.

Racial Bias in Hate Speech Detection

Presentation of the paper at ACL

This paper describes how annotators can induce racial bias in hate speech detection.
